Welcome back to Beyond the Patterns. Today I have the great pleasure to announce a speaker from DeepMind, Petar Veličković. Petar is a senior research scientist at DeepMind. He holds a PhD in computer science from the University of Cambridge (Trinity College), obtained under the supervision of Pietro Liò. His research interests involve devising neural network architectures that operate on non-trivially structured data, such as graphs, and their applications in algorithmic reasoning and computational biology. He has published relevant research in these areas at both machine learning venues (NeurIPS, ICLR, ICML-W) and biomedical venues and journals (Bioinformatics, PLOS One, JCB and PervasiveHealth). In particular, he is the first author of Graph Attention Networks, a popular convolutional layer for graphs, and of Deep Graph Infomax, a scalable local/global unsupervised learning pipeline for graphs, which was also featured in ZDNet. Further, his research has been used to substantially improve the travel-time predictions in Google Maps, which was covered by outlets including CNBC, Engadget, VentureBeat, CNET, The Verge and ZDNet.

So, you see that Petar is a really inspiring and popular researcher from DeepMind, and it's a great pleasure to have him here today. It's an even greater pleasure because today is also the release date of his book, Geometric Deep Learning, which he is co-authoring with Michael Bronstein and other colleagues, and which will be available right after the recording of this presentation.

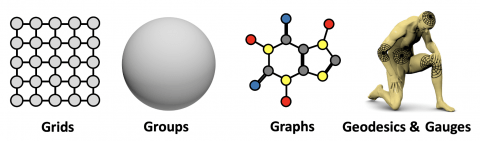

The talk is also titled after the book: Geometric Deep Learning: Grids, Groups, Graphs, Geodesics, and Gauges. So, Petar, I'm very much looking forward to the presentation, and the stage is yours.

Thank you very much, Andreas, for the fine introduction and for inviting me to speak to you all today. It is a great pleasure to be speaking, virtually, at Erlangen, and I hope you will find the content of my talk interesting: a fun way both to synthesize interesting ideas in machine learning and to link back to the early days of geometry.

I'm starting this talk in a somewhat unconventional way. I don't have a title slide yet. Instead, we're going to start by going back in time, quite far back, to around 300 BC and the time of Euclid, when the original foundations of geometry were first laid out. For a very long time, Euclid and his Euclidean geometry were simply the way to do geometry within mathematics. Then, in the 1800s, there was a boom of new geometries proposed as alternatives to Euclidean geometry, such as the hyperbolic geometry of Lobachevsky and Bolyai and the elliptic geometry of Riemann. With this wealth of geometries being proposed left, right and center, the 19th century was certainly a super exciting time to study geometry, but it also led people to ask: what is the one true geometry? There are all these different geometries; each is individually consistent, and each leads to its own theory. What is the unifying principle? Which geometry should we be looking at?

A very imaginative solution to this problem was given by a young professor by the name of Felix Klein, in his inaugural talk at this very university, which subsequently became known as the Erlangen program. In this talk, Klein laid out a blueprint that would eventually allow us to unify the geometries known and proposed at the time through the lens of invariances and symmetries, using the formalism of group theory to express the links between different geometries and how we might observe them. So, as I mentioned, it is very exciting for me to be speaking at this very university and linking back to the work of Felix Klein, and it cannot be overstated how strong an impact this program had on geometry.

Eventually, through the work of Élie Cartan in the 1920s, all of these different geometries were unified under this lens of invariances and symmetries. The program also had a strong spillover effect in physics, through the work of Emmy Noether, whose theorem shows that conservation laws in physics follow from symmetries. Within theoretical computer science, the impact of the Erlangen program was also quite magnificent. In fact, in the words of its creators, you can think of the very field of category theory


Accessible via: Open access

Duration: 01:15:13 min

Recording date: 2021-04-28

Uploaded on: 2021-04-28 19:16:45

Language: en-US

It’s a great pleasure to welcome Petar Veličković from DeepMind to our Lab!

Abstract: The last decade has witnessed an experimental revolution in data science and machine learning, epitomised by deep learning methods. Indeed, many high-dimensional learning tasks previously thought to be beyond reach (such as computer vision, playing Go, or protein folding) are in fact feasible with appropriate computational scale. Remarkably, the essence of deep learning is built from two simple algorithmic principles: first, the notion of representation or feature learning, whereby adapted, often hierarchical, features capture the appropriate notion of regularity for each task, and second, learning by local gradient-descent type methods, typically implemented as backpropagation.

While learning generic functions in high dimensions is a cursed estimation problem, most tasks of interest are not generic, and come with essential pre-defined regularities arising from the underlying low-dimensionality and structure of the physical world. This talk is concerned with exposing these regularities through unified geometric principles that can be applied throughout a wide spectrum of applications.

Such a ‘geometric unification’ endeavour in the spirit of Felix Klein’s Erlangen Program serves a dual purpose: on one hand, it provides a common mathematical framework to study the most successful neural network architectures, such as CNNs, RNNs, GNNs, and Transformers. On the other hand, it gives a constructive procedure to incorporate prior physical knowledge into neural architectures and provides a principled way to build future architectures yet to be invented.
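To make the symmetry idea above concrete for GNNs, here is a minimal sketch, assuming a small undirected graph whose nodes carry integer feature vectors. The graph, the features and the helper names `sum_aggregate_layer`, `sum_readout` and `relabel` are hypothetical and chosen only for illustration; this is a toy check, not an architecture from the talk or the book. It verifies that a sum-based message-passing step is permutation-equivariant (relabelling the nodes relabels the outputs in the same way) and that a sum readout is permutation-invariant (the graph-level output ignores the labelling). Integer features keep the equality checks exact.

```python
import itertools


def sum_aggregate_layer(node_features, adjacency):
    """Hypothetical toy message-passing step: each node adds its neighbours' features to its own."""
    out = []
    for i, x in enumerate(node_features):
        agg = list(x)
        for j in adjacency[i]:
            agg = [a + b for a, b in zip(agg, node_features[j])]
        out.append(agg)
    return out


def sum_readout(node_features):
    """Hypothetical graph-level readout: sum every feature dimension over all nodes."""
    return [sum(col) for col in zip(*node_features)]


def relabel(node_features, adjacency, perm):
    """Relabel nodes so that new node k is old node perm[k]; neighbour lists are remapped too."""
    new_of_old = {old: new for new, old in enumerate(perm)}
    feats = [node_features[old] for old in perm]
    adj = [[new_of_old[j] for j in adjacency[old]] for old in perm]
    return feats, adj


# Hypothetical toy graph (edges 0-1, 1-2, 1-3, 2-3) and features, for illustration only.
X = [[1, 0], [0, 2], [3, 1], [2, 2]]
A = [[1], [0, 2, 3], [1, 3], [1, 2]]
H = sum_aggregate_layer(X, A)

for perm in itertools.permutations(range(len(X))):
    X_p, A_p = relabel(X, A, perm)
    H_p = sum_aggregate_layer(X_p, A_p)
    # Equivariance: relabelling the input relabels the output in the same way.
    assert H_p == [H[old] for old in perm]
    # Invariance: the graph-level readout ignores the labelling entirely.
    assert sum_readout(H_p) == sum_readout(H)

print(H)               # [[1, 2], [6, 5], [5, 5], [5, 5]]
print(sum_readout(H))  # [17, 17]
```

Sum aggregation is used here only because it makes both properties easy to check exactly; any aggregator that does not depend on neighbour order (such as mean or max) would keep the same symmetry.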

Bio: Petar Veličković is a Senior Research Scientist at DeepMind. He holds a PhD in Computer Science from the University of Cambridge (Trinity College), obtained under the supervision of Pietro Liò. His research interests involve devising neural network architectures that operate on nontrivially structured data (such as graphs), and their applications in algorithmic reasoning and computational biology. He has published relevant research in these areas at both machine learning venues (NeurIPS, ICLR, ICML-W) and biomedical venues and journals (Bioinformatics, PLOS One, JCB, PervasiveHealth). In particular, he is the first author of Graph Attention Networks, a popular convolutional layer for graphs, and Deep Graph Infomax, a scalable local/global unsupervised learning pipeline for graphs (featured in ZDNet). Further, his research has been used to substantially improve the travel-time predictions in Google Maps (covered by outlets including CNBC, Engadget, VentureBeat, CNET, The Verge and ZDNet).

Geometric Deep Learning Website:

https://geometricdeeplearning.com

Michael Bronstein's Blog Post on Geometric Deep Learning:

https://towardsdatascience.com/geometric-foundations-of-deep-learning-94cdd45b451d

Petar's Talk at Cambridge:

https://www.youtube.com/watch?v=uF53xsT7mjc

This video is released under CC BY 4.0. Please feel free to share and reuse.


Music Reference:

Damiano Baldoni - Thinking of You (Intro)

Damiano Baldoni - Poenia (Outro)